In his articles for ArchSmarter, Michael Kilkelly often praises the value of computers and automation, a sometimes controversial viewpoint with plenty of advocates on both sides. In particular, his previous post on ArchDaily, "5 Reasons Architects Should Learn to Code," provoked significant discussion. But what is the value of this automation? In this post, originally published on ArchSmarter, he expands on his view of what computers can be useful for and, more importantly, what they can't.

I write a lot about digital technology and automation here on ArchSmarter, but deep down inside, I have a soft spot for all things analog. I still build physical models. I carry a Moleskine notebook with me everywhere. I also recently bought a Crosley record player.

I can listen to any kind of music I want through Spotify. The music world is literally at my fingertips. Playing records hasn’t changed what I listen to, but it has changed how I listen to music. There’s more friction involved with records. I have to physically own the record and I have to manually put it on the turntable. It’s a deliberate act that requires a lot more effort than just selecting a playlist on Spotify. And it’s a lot more fun.

This split between the physical and the digital was much on my mind while I read Nicholas Carr’s new book, The Glass Cage: Automation and Us. Carr’s premise is that while digital technology gives us a lot in terms of efficiency and convenience, it takes away even more. The increasing use of automation is distancing us from an essential human characteristic: the link between our hands and our minds. As Carr states, “Automation weakens the bond between tool and user not because computer-controlled systems are complex but because they ask so little of us.”

So are computers bad for architecture? Carr dedicates a whole chapter of the book to this question. There’s no doubt that computers have become essential to the practice of architecture. BIM and CAD software has made firms more efficient and has sped up the construction document process. I don’t think anyone wants to return to the days of hand drafting and ammonia blue-line machines.

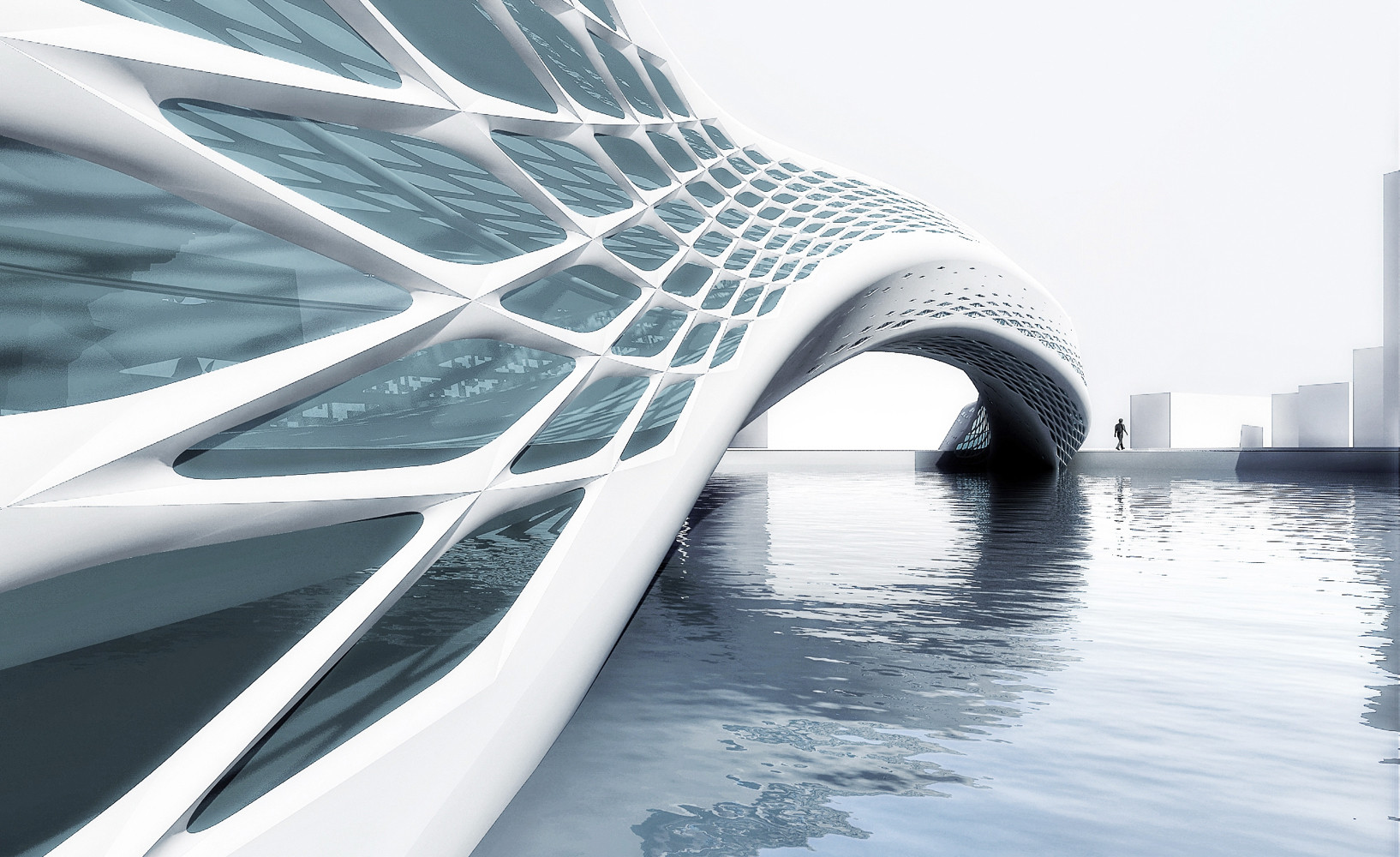

However, what happens when we give more control of the design process over to the computer? As Carr states, “... the very speed and exactitude of the machine may cut short the messy and painstaking process of exploration that gives rise to the most inspired and meaningful designs.” Can tweaking parameters in a 3D model provide the same kind of dialogue with a design as sketching or building a physical model? Carr cites British design scholar Nigel Cross, who states that “Sketching enables exploration of the problem space and the solution space to proceed together.” The ability to think through the problem and the solution simultaneously is intricately tied to the medium you’re using.

Contrast designing by hand with designing on the computer with a parametric model. A parametric approach works when the problem is well understood. The relationships between form and parameters grow more complex as the model develops, and rebuilding a model to account for newly discovered relationships takes time. At early stages of design, however, the problem you’re solving is often loosely formed and not fully understood. You learn what you’re trying to solve while you’re solving it. As such, you need to move fast and iterate quickly. You need to work abstractly. The precision demanded by the computer isn’t always conducive to this type of work.

With that in mind, it’s interesting to see Google’s first foray into the AEC space with Flux. Google wants to take the parametric model even further and remove the designer from the equation. Flux is Google’s attempt to improve the efficiency of the construction sector and close the gap between supply and projected demand for new construction over the next 40 years. In essence, Flux aims to aggregate data from multiple sources to provide a constraints-based approach to building design. With Flux’s first product, Metro, you can easily visualize zoning regulations in a developer-friendly user interface. Its ambitions are much greater, however. The team plans to encapsulate a building’s design in an algorithmic “seed” that can respond to local conditions and automatically adjust itself accordingly. The same design can be replicated thousands of times. You can read more on what Flux means to the AEC industry in these great articles by Randy Deutsch and Josh Lobel.

I’m all for incorporating more data into the design process. However, at what point do the machine and the data take over the design process? When the process is optimized to optimize, who really wins? Do we get better buildings, better cities and better towns when an algorithm determines the most efficient form? As Carr warns in The Glass Cage, the danger is that designers and architects will “. . . rush down the path of least resistance, even though a little resistance, a little friction, might have brought out the best in them.”

Just as my new record player has added welcome friction to how I listen to music, I believe in keeping friction in the design process. It creates time for reflection and thought. That said, I’m a strong proponent of automation when it removes tedious, low-value work from our workday. There’s nothing to be gained by manually renumbering sheets in a Revit file, for example. The thinking work, the good stuff, should remain within the purview of the professional.

Design is a crucial activity that can’t be reduced to an algorithm, or if it can (à la Google Flux), it shouldn’t be. It’s too important a task to leave to the machines. As Carr states in The Glass Cage, “When automation distances us from our work, when it gets between us and the world, it erases the artistry from our lives.” I think we could all benefit from a little more artistry.